December 13, 2024

In the evolving world of artificial intelligence, it is important to understand the distinction between traditional ML and LLMs. Machine Learning (ML) has been an integral part of AI for years, solving specific tasks like prediction and classification. Large Language Models (LLMs), meanwhile, have emerged as tools that excel at processing and generating human-like text. But what truly sets them apart? This post explains the core differences, highlights the strengths and applications of each, and shows why both are essential in today’s AI landscape.

Machine learning (ML) is a branch of AI that focuses on developing algorithms that can learn from data. Unlike traditional programming, where explicit instructions are coded, ML systems identify patterns and make predictions or decisions based on the data they process.
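As a minimal sketch of that contrast, the snippet below places a hand-written rule next to a rule whose threshold is learned from labelled examples. The transaction amounts, labels, and function names are hypothetical and chosen only for illustration. Real ML systems use far richer models than a single threshold.

```python
# Hypothetical labelled data: (transaction amount, is_fraud)
EXAMPLES = [
    (12.0, False), (25.0, False), (40.0, False), (95.0, False),
    (280.0, True), (310.0, True), (450.0, True),
]


def hand_coded_rule(amount: float) -> bool:
    """Traditional programming: the programmer picks the threshold."""
    return amount > 1000.0


def learn_threshold(examples):
    """Learning from data: pick the threshold with the fewest training errors."""
    amounts = sorted(amount for amount, _ in examples)
    # Candidate thresholds are the midpoints between consecutive amounts.
    candidates = [(low + high) / 2 for low, high in zip(amounts, amounts[1:])]

    def errors(threshold):
        # Count examples where "amount > threshold" disagrees with the label.
        return sum((amount > threshold) != label for amount, label in examples)

    return min(candidates, key=errors)


threshold = learn_threshold(EXAMPLES)


def learned_rule(amount: float) -> bool:
    """Same shape as the hand-coded rule, but the threshold came from the data."""
    return amount > threshold
```

With this toy data, the learned rule flags a 300.0 transaction that the hand-coded rule lets through, because its threshold was fitted to the examples rather than guessed.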

ML has powered a variety of applications, from recommendation systems to fraud detection. However, as technology evolves, new models like LLMs have emerged to address specific challenges in language and text understanding.

Large Language Models (LLMs) are advanced machine learning models specifically designed to process and generate human-like text. Examples include the GPT (Generative Pre-trained Transformer) family, such as GPT-4. LLMs are trained on massive datasets containing diverse information, which enables them to interpret prompts and generate fluent, coherent text.

LLMs are a key component of modern Natural Language Processing (NLP) solutions, offering capabilities like summarizing content, translating languages, and engaging in human-like conversations. They’re particularly useful in applications where understanding context and generating meaningful responses are important.

Large Language Models are deep learning-based systems designed to handle vast and diverse datasets.

Traditional ML and LLMs represent two distinct approaches in the AI landscape. ML models are trained for narrow tasks, usually on structured data, whereas LLMs, such as OpenAI’s GPT series, are designed to process and generate human-like text and often require massive datasets and computational power. The conversation surrounding LLM vs NLP models highlights the evolution of AI from basic text analysis to sophisticated language understanding and generation.

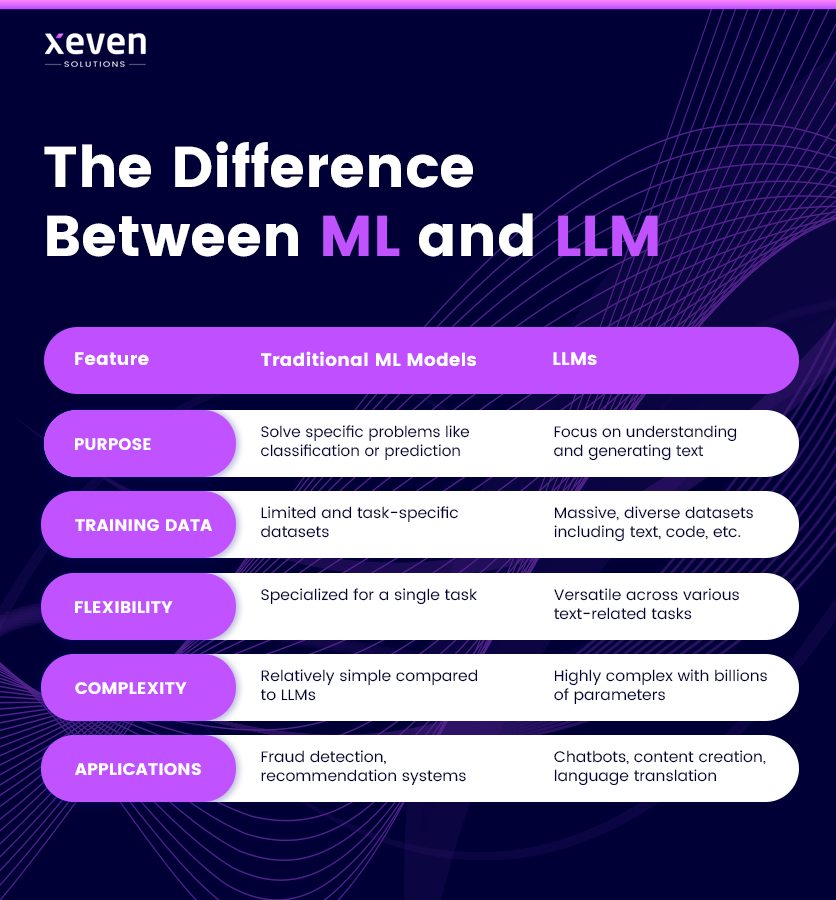

Purpose: Traditional machine learning models are designed to solve specific problems, such as classification or prediction tasks. For instance, they might determine whether an email is spam or predict the likelihood of a customer making a purchase. On the other hand, LLMs are focused on understanding and generating text, enabling them to perform a wide range of text-related tasks like writing essays, answering questions, or conducting conversations.
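To make the spam example concrete, here is a small sketch of a narrow, single-purpose classifier: a multinomial naive Bayes model with Laplace smoothing, written with the Python standard library only. The four training messages and the names `TRAIN`, `train`, and `predict` are hypothetical. A production spam filter would train on far more data and would normally use an established library.

```python
import math
from collections import Counter

# Hypothetical toy training set of (message, label) pairs.
TRAIN = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]


def train(examples):
    """Count words per label and messages per label."""
    word_counts = {}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, doc_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for the message."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in doc_counts:
        # Start from the log prior of the label.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.split():
            if word not in vocab:
                continue  # Words never seen in training are ignored.
            # Laplace (add-one) smoothing avoids zero probabilities.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAIN)
```

Calling `predict(model, "claim your free money")` labels the message as spam, but the model can do nothing else: it cannot summarize the email or draft a reply, which is the kind of open-ended text task an LLM targets.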

Training Data: ML models are typically trained on task-specific datasets that are often smaller in size and focused on structured data, like customer transactions or sensor readings. LLMs, however, are trained on vast and diverse datasets that include text from books, websites, and other sources, allowing them to handle a broader range of topics and tasks.

Flexibility: ML models are generally specialized for a single task. For example, a model trained to recommend products will not perform well at detecting fraud. LLMs, in contrast, are highly versatile and can tackle various text-related challenges, from summarizing articles to assisting with code, all without needing extensive retraining.

Complexity: Traditional ML models are relatively simple in terms of architecture and size. They often have far fewer parameters and require less computational power than LLMs. LLMs are highly complex systems with billions of parameters, enabling them to capture subtle nuances in language and produce human-like outputs.
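A rough back-of-the-envelope calculation shows the size gap. The sketch below compares a logistic regression model with a stack of transformer blocks. It assumes a standard block with four attention projection matrices and a feed-forward layer with 4x expansion, which gives about 12 × d_model² weights per block, and it ignores embeddings, biases, and layer norms. The feature count, width, and depth are hypothetical values picked for illustration and do not describe any specific model.

```python
def logistic_regression_params(num_features: int) -> int:
    """One weight per input feature plus a single bias term."""
    return num_features + 1


def transformer_block_params(d_model: int) -> int:
    """Approximate weight count of one transformer block (assumed layout)."""
    attention = 4 * d_model * d_model     # query, key, value, output projections
    feed_forward = 8 * d_model * d_model  # two matrices of size d_model x 4*d_model
    return attention + feed_forward


def transformer_params(d_model: int, num_layers: int) -> int:
    """Approximate total for a stack of identical blocks."""
    return num_layers * transformer_block_params(d_model)


small_model = logistic_regression_params(30)   # 31 parameters
large_model = transformer_params(4096, 32)     # about 6.4 billion parameters
```

Under these assumptions the gap is roughly eight orders of magnitude, which is why LLM training and inference need so much more compute.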

Applications: ML models are widely used in domains like fraud detection, recommendation systems, and predictive analytics, where structured data and clear goals are present. LLMs excel in applications requiring deep understanding of language, such as chatbots, content creation, and real-time language translation.

Traditional ML models are effective in domains where structured data is abundant and tasks are narrowly defined. On the other hand, LLMs shine in text-heavy applications, leveraging their vast knowledge base to address nuanced challenges.

While Generative AI applications and LLMs often overlap, they are not identical. Generative AI refers to a broader category of AI systems capable of creating content, such as images, videos, and text. LLMs are a subset of generative AI focused exclusively on text-based outputs.

For example, tools like DALL-E (image generation) and ChatGPT (text generation) are both generative AI systems. However, ChatGPT is an LLM designed for natural language processing tasks, making it part of the generative AI ecosystem but specialized in text-related challenges.

In the debate of ML vs LLM, it’s clear that both have distinct roles in the AI landscape. Traditional machine learning models excel in structured, task-specific applications, while LLMs dominate in understanding and generating human-like text. As AI continues to evolve, the synergy between ML and LLM technologies will unlock even greater potential for businesses and society.

Whether you’re comparing generative AI with LLMs or looking for an AI consulting firm to help integrate these solutions, understanding how the models differ will help you use them effectively.